Microservices testing

Hello! I am Yevhen Garmash, Automation QA Engineer at Vector Software.

In this article, I will share my experience of automating the testing of a web application that is built on a microservice architecture and demonstrate an example of building multi-level testing.

This article will be useful for IT professionals who want to master microservices testing as they move from monolithic architectures to more flexible and scalable solutions, even if they do not yet have much experience in this area.

We were approached by a client with a request to migrate a monolithic web application to a microservice architecture. The client needed to adapt the application to different deployment configurations so that certain functions could be turned on or off according to customer requirements, depending on their localization. The application allowed users to buy and sell assets, exchange messages, and perform other standard functions. The client tested the application with a set of E2E tests. The test environment was a Jenkins test agent where the application was deployed, and the tests were executed in a separate Jenkins pipeline.

Over time, the development team began to rewrite the application, and as a result, the former monolith became a set of microservices.

Microservice application architecture

- The front-end receives commands from users, such as registering a new user, purchasing assets, and sending messages, and sends corresponding requests to microservices B, C, and D.

- Microservice A is the main service. It works as a central repository of configuration data, which it keeps in RAM for quick access.

- Microservice B is responsible for purchasing assets by users and storing information about these assets in the PostgreSQL database. It receives configuration data from Microservice A to configure its operation and communicates with Microservice D via Apache Kafka to synchronize data.

- Microservice C is responsible for sending private messages between users. It also receives configuration data from Microservice A and communicates with Microservice D via Apache Kafka for message/user synchronization.

- Microservice D is a service that manages users and stores all their data in a database.

Testing:

The task of the QA team was to ensure the testing of the application. However, when working with existing E2E scenarios, there were issues that needed to be resolved.

- Impossibility of early testing:

E2E tests are not effective for early testing of microservices, especially when some of them are still in the migration process and the entire application cannot be deployed to execute them.

- Duration of execution:

E2E tests took over an hour to run, which slowed down the development process.

- Difficulty locating the source of the defect:

E2E tests exercise the entire system as a whole, so it was difficult to find the source of a problem.

For example, if microservices use each other’s APIs, problems in the shared interface are difficult to detect because E2E tests cover broader interaction scenarios rather than individual requests. Also, with Apache Kafka, message delivery is asynchronous and, depending on how producers and consumers are configured, not necessarily guaranteed, so a lost or delayed message is hard to spot from an E2E test.

- Test data management:

In the microservice architecture, test data is harder to manage because the services use different databases (Microservice B uses PostgreSQL, while Microservice D uses its own SQL database).

For example, running E2E tests in parallel that modify the configuration in the database can cause a state conflict between the tests, causing unpredictable results due to simultaneous changes.

- Dependence on other components:

Using Apache Kafka makes tests less predictable due to the asynchronous nature of message processing. This can cause a cascading failure of the entire test suite, making it difficult to test the target functionality.

Solution:

To solve these problems, the QA team decided to divide testing into different levels. This allowed us to start testing as quickly as possible, even as the microservices were rewritten in stages.

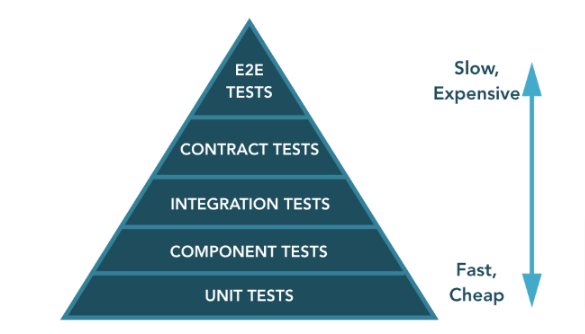

Here is an example of such a testing pyramid:

- Unit testing

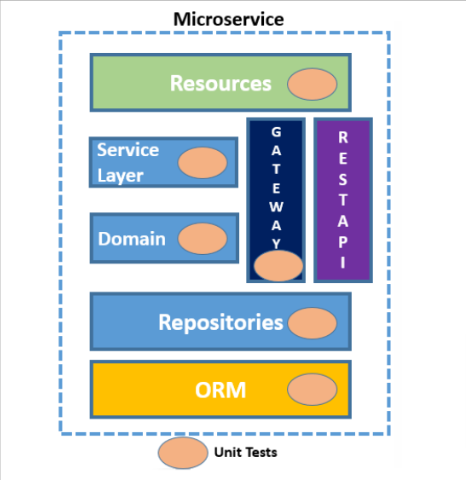

The front-end and back-end developers wrote the unit tests needed to cover their code. SonarQube was also integrated into CI/CD to analyze code quality. Unit tests ran in the pipeline before each merge into the master branch. This is important because unit tests form the base of the testing pyramid and give the earliest signal that a change has broken existing code.

- Component testing

The next step is component testing of microservices in isolation, which occupies the second level of the testing pyramid. This is a simple, fast and reliable way to test each microservice individually.

We used Docker to set up an environment where every component of the system, including test tools, was deployed in its own Linux container.

Since our application interacts with other microservices and databases, we used WireMock.Net to create virtual microservices and the In-Memory Database Provider to simulate the database. This gave us complete isolation and reproduced the necessary dependencies without the need for real external services. As part of the continuous integration (CI) process, component tests ran immediately after unit tests for each service.
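To make the idea concrete, here is a minimal sketch of such a component test. It is written in Python with only the standard library, not with the tools we actually used: a small local HTTP server stands in for the WireMock.Net stub of Microservice A, and an in-memory SQLite database stands in for the In-Memory Database Provider. The `buy_asset` function, the `/config` path, and the `max_assets_per_user` setting are hypothetical names invented for this illustration.

```python
import json
import sqlite3
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class ConfigStub(BaseHTTPRequestHandler):
    """Stub for Microservice A: always returns a canned configuration."""

    def do_GET(self):
        body = json.dumps({"max_assets_per_user": 2}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


def buy_asset(db, config_url, user_id, asset):
    """Hypothetical slice of Microservice B: read config, enforce limit, store asset."""
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # no proxies
    with opener.open(config_url) as resp:
        limit = json.load(resp)["max_assets_per_user"]
    owned = db.execute(
        "SELECT COUNT(*) FROM assets WHERE user_id = ?", (user_id,)
    ).fetchone()[0]
    if owned >= limit:
        return False
    db.execute("INSERT INTO assets (user_id, name) VALUES (?, ?)", (user_id, asset))
    return True


def run_component_test():
    server = HTTPServer(("127.0.0.1", 0), ConfigStub)  # port 0 picks a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    db = sqlite3.connect(":memory:")  # isolated database, gone when the test ends
    db.execute("CREATE TABLE assets (user_id INTEGER, name TEXT)")
    url = f"http://127.0.0.1:{server.server_port}/config"
    try:
        return [buy_asset(db, url, 1, name) for name in ("gold", "silver", "oil")]
    finally:
        server.shutdown()
        server.server_close()
        db.close()
```

Because the stub and the database live only for the duration of the test, the test does not depend on any other service being deployed.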

Advantages:

- Identifying errors at an early stage

- Isolation of test environments

- Fast test execution

- Integration testing

A key aspect of integration testing in a microservices architecture is verifying the correct interaction between different system components, such as application services, databases, and external services.

Integration testing forms the third level of the testing pyramid: it verifies that components which work correctly in isolation also work correctly together.

Let’s say we want to test how Microservice B interacts with the database.

For this, we create a test environment based on Linux containers, where we can deploy the service itself, the database, Kafka and the test system.

The testbed includes a number of tests that verify this interaction and also uses a Mock Service (WireMock.Net) to simulate the behavior of Microservice A.

This kind of integration testing process is done in the context of continuous integration (CI), usually right after unit tests, and we used it to test all the microservices in our system.

Testing procedure:

- Preparation of prerequisites: The integration test creates the necessary test data in the PostgreSQL database.

- Sending a request to Microservice B: The test sends an HTTP request to Microservice B to create an asset.

- Getting configuration from Microservice A: Microservice B sends a request to Microservice A to get the required configuration. The Mock Service that stands in for Microservice A intercepts this request and returns a pre-prepared configuration for the test.

- Creating an entity in the database: Microservice B makes a request to the database to create an entity.

- Sending a message to Kafka: Microservice B sends the message to Kafka, where it is stored.

- Validation of results: The test checks whether the entity was successfully created in the database and whether the message was successfully delivered to Kafka.
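The procedure above can be sketched as follows. This is an illustration under stated assumptions, not our real test code: an in-memory SQLite database stands in for PostgreSQL, a `queue.Queue` stands in for the Kafka topic, a plain function stands in for the Mock Service, and `create_asset`, the table layout, and the `currency` setting are hypothetical.

```python
import queue
import sqlite3
import threading


def create_asset(db, topic, get_config, user_id, name):
    """Hypothetical stand-in for Microservice B's 'create asset' handler."""
    # Getting configuration from (the stub of) Microservice A
    currency = get_config()["currency"]
    # Creating an entity in the database
    db.execute(
        "INSERT INTO assets (user_id, name, currency) VALUES (?, ?, ?)",
        (user_id, name, currency),
    )
    # Sending a message: published after a short delay to mimic asynchronous delivery
    event = {"type": "asset_created", "user_id": user_id, "name": name}
    threading.Timer(0.05, topic.put, args=(event,)).start()


def run_integration_test():
    # Preparation of prerequisites: schema and test data
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY)")
    db.execute("CREATE TABLE assets (user_id INTEGER, name TEXT, currency TEXT)")
    db.execute("INSERT INTO users (id) VALUES (1)")
    topic = queue.Queue()

    # Sending a request to Microservice B
    create_asset(db, topic, lambda: {"currency": "USD"}, user_id=1, name="gold")

    # Validation of results: the entity exists and the message arrives in time
    row = db.execute(
        "SELECT name, currency FROM assets WHERE user_id = 1"
    ).fetchone()
    try:
        message = topic.get(timeout=2.0)  # wait for the message, but not forever
    except queue.Empty:
        message = None
    db.close()
    return row, message
```

The bounded wait in the last step is the important design choice: the message arrives asynchronously, so the test waits for it with a timeout instead of asserting immediately.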

The above approach has the following advantages:

- Quick feedback

- Isolation of environments

- Test environment deployment speed

- Stubbed communication with other services

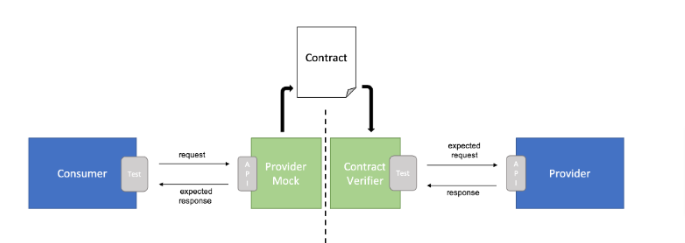

- Contract testing

After completion of integration testing, it is necessary to check how microservices interact and transfer data between themselves.

The next step in the testing pyramid is contract testing. This type of test verifies that services interact correctly and comply with the interfaces and agreements established between them.

According to the architecture, there are two ways of exchanging data in our application:

- Using the REST API

- Using messages via Apache Kafka

Contract testing using REST:

Consider the communication between Microservice B and Microservice A:

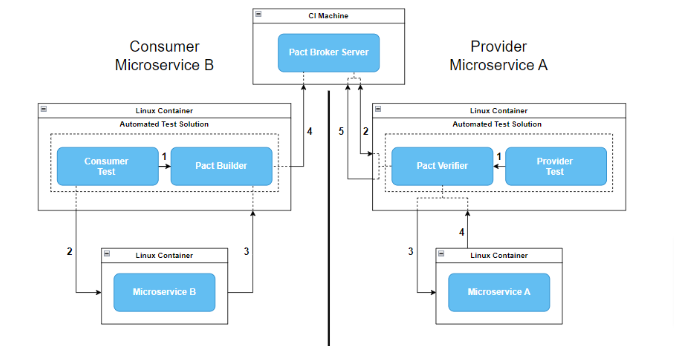

- First, we create Linux-based Docker images for testing and services.

- Pact Broker Server, which stores and manages contracts, is installed on the Jenkins agent.

- We use the Pact.net framework to generate contracts.

In this case, we use a non-standard approach to contract testing, where the contract is generated by a real microservice.

Consumer Microservice B:

- Pact Builder call: Consumer test calls Pact Builder, in which we explicitly indicate which HTTP request should be intercepted (Endpoint, Http Method, Query) and the response we expect from Microservice A.

- Sending an HTTP request: Consumer test sends an HTTP request to Microservice B.

- HTTP request interception: Microservice B sends an HTTP request, which Pact intercepts if it matches the request specified in the Pact Builder call.

- Contract validation and publication: In case of successful validation, the test publishes the contract to the Pact Broker Server.
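The consumer-side flow can be illustrated with a small hand-rolled sketch. It deliberately does not use the Pact API, and the JSON it produces is a simplified structure, not the real Pact file format. `InteractionStub`, `load_trading_config`, the `/config` endpoint, and the `trading_enabled` field are all hypothetical names chosen for the example.

```python
import json


class InteractionStub:
    """Answers only the one request declared up front and records that it was hit."""

    def __init__(self, method, path, query, response_body):
        self.expected = {"method": method, "path": path, "query": query}
        self.response_body = response_body
        self.matched = False

    def handle(self, method, path, query):
        # Intercept the outgoing request and compare it with the declared one
        if {"method": method, "path": path, "query": query} != self.expected:
            raise AssertionError(f"unexpected request: {method} {path}?{query}")
        self.matched = True
        return self.response_body

    def to_contract(self, consumer, provider):
        # A contract is only produced if the declared interaction really happened
        if not self.matched:
            raise AssertionError("declared interaction was never exercised")
        return {
            "consumer": consumer,
            "provider": provider,
            "interactions": [
                {
                    "request": self.expected,
                    "response": {"status": 200, "body": self.response_body},
                }
            ],
        }


def load_trading_config(send):
    """Hypothetical consumer code in Microservice B; `send` performs the HTTP call."""
    config = send("GET", "/config", "service=trading")
    return config["trading_enabled"]


def run_consumer_test():
    stub = InteractionStub(
        "GET", "/config", "service=trading", {"trading_enabled": True}
    )
    enabled = load_trading_config(stub.handle)
    contract = stub.to_contract("MicroserviceB", "MicroserviceA")
    # In the real setup the contract would now be published to the Pact Broker Server
    return enabled, json.dumps(contract, sort_keys=True)
```

The key property is that the contract records only what the consumer actually sent and what it relies on in the response, so it reflects real usage rather than a hand-written specification.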

Provider Microservice A:

- Pact Verifier call: Provider test calls Pact Verifier, specifying the URL of the Pact Broker Server and the Provider name used in the contract generated by the Consumer.

- Obtaining the contract: Pact Verifier via HTTP request obtains the required contract.

- Sending an HTTP request: Pact sends the request specified by the Consumer to Microservice A.

- Request Processing: After receiving an HTTP request, Microservice A returns a response to Pact Verifier.

- Validation and publication of the result: Pact checks the response received from Microservice A with the response specified in the contract by Consumer and sends the result of the check to the Pact Broker Server.
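A minimal sketch of the provider-side check is shown below. Again, this is not the Pact Verifier API: `verify_provider`, `matches`, `config_handler`, and the contract literal are hypothetical, and the contract uses a simplified structure rather than the real Pact file format.

```python
def matches(expected, actual):
    """True if `actual` has every key in `expected` with an equal value (recursively).

    Extra keys in `actual` are allowed, so the provider can add fields
    without breaking the consumer.
    """
    if isinstance(expected, dict):
        return isinstance(actual, dict) and all(
            key in actual and matches(value, actual[key])
            for key, value in expected.items()
        )
    return expected == actual


def verify_provider(contract, handler):
    """Replay each request from the contract and collect pass/fail per interaction."""
    results = []
    for interaction in contract["interactions"]:
        request = interaction["request"]
        status, body = handler(request["method"], request["path"], request["query"])
        expected = interaction["response"]
        results.append(
            status == expected["status"] and matches(expected["body"], body)
        )
    return results


def config_handler(method, path, query):
    """Hypothetical stand-in for Microservice A's configuration endpoint."""
    if method == "GET" and path == "/config":
        return 200, {"trading_enabled": True, "region": "EU"}
    return 404, {}


# Contract as the consumer would have published it (simplified structure)
CONTRACT = {
    "consumer": "MicroserviceB",
    "provider": "MicroserviceA",
    "interactions": [
        {
            "request": {"method": "GET", "path": "/config", "query": "service=trading"},
            "response": {"status": 200, "body": {"trading_enabled": True}},
        }
    ],
}
```

Calling `verify_provider(CONTRACT, config_handler)` replays the single interaction against the handler. This sketch compares values exactly; Pact itself also supports looser matching rules such as matching by type, which are not reproduced here.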

Contract testing using messages:

We tested the message exchange between Microservice B and Microservice D.

- Use of Pact.net: We implemented the contract tests with Pact.net, which supports testing service integration through message exchange.

- Microservice B: The test solution for Microservice B was implemented in a Linux container. This solution is responsible for generating contract messages and publishing them to the Pact Broker Server.

- Microservice D: For Microservice D, a test solution was also deployed in a Linux container. In addition, Kafka was started in a Linux container.

- Purpose of the test solution: The main purpose of the test solution was to load a contract and publish messages to Kafka.

- Sequence of test execution: In the current project, contract tests were executed after the execution of integration tests as part of CI (Continuous Integration).

Consumer Microservice B:

- Pact Builder Call: Consumer Test calls Pact Builder to create a message contract for Microservice D.

- Publishing the contract: Pact Builder publishes the contract to the Pact Broker Server using HTTP.

Provider Microservice D:

- Call Pact Verifier: Provider Test calls Pact Verifier, which contains the Pact Broker server configuration data.

- Obtaining the contract: Pact Verifier via HTTP request obtains the required contract with the messages.

- Call Kafka Producer: Provider Test calls Kafka Producer, which is responsible for sending messages to Kafka.

- Sending messages to Kafka: Kafka Producer sends the message specified in the contract to Kafka.

- Receiving messages: Kafka Listener, being subscribed to a specific topic, receives messages.

- Message Validation: Pact Verifier compares the received message with the expected message from the contract.

- Publication of results: Pact Verifier sends verification results to the Pact Broker server.
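The provider-side round trip described above can be sketched roughly as follows. A `queue.Queue` stands in for the Kafka topic, and the contract is a simplified structure, not the real Pact message format. `verify_messages`, the `user updated` description, and the message fields are hypothetical.

```python
import json
import queue

# Message contract as the consumer would have published it (simplified structure)
MESSAGE_CONTRACT = {
    "consumer": "MicroserviceB",
    "provider": "MicroserviceD",
    "messages": [
        {
            "description": "user updated",
            "contents": {"user_id": 42, "email": "user@example.com"},
        }
    ],
}


def verify_messages(contract, topic):
    """Send each contract message through the topic and compare what comes back."""
    results = {}
    for message in contract["messages"]:
        # Producer side: serialize and send the message from the contract
        topic.put(json.dumps(message["contents"]).encode("utf-8"))
        # Listener side: receive from the topic (bounded wait) and deserialize
        received = json.loads(topic.get(timeout=2.0))
        # Validation: the received message must equal the expected contents
        results[message["description"]] = received == message["contents"]
    return results
```

Sending the message through the topic, rather than comparing in memory, also exercises serialization and deserialization on the way.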

Advantages:

- Ensuring compatibility between services

- Quick detection of changes

- Reduction of dependencies between services

- Test environment deployment speed

- E2E testing

Even with the multi-level testing pyramid, E2E testing remains an important step in testing microservices applications. After implementing the multi-level pyramid, we reduced the number of E2E tests, which led to the following advantages:

- Reducing the duration of tests: With fewer E2E tests, the suite finishes sooner and gives faster feedback, while still confirming that the entire system meets the customer’s requirements.

- Lower test maintenance costs: E2E tests take a lot of time to execute, verify, and debug. When updating the functionality or structure of the system, they have to be reviewed and changed, which requires additional resources.

- Reduced test environment maintenance costs: Each unique application configuration may require a separate test environment configured for specific requirements and dependencies. Therefore, deploying, configuring, and managing such environments require significantly more resources and time. By reducing the scope of E2E tests and focusing on lower-level tests, it is possible to optimize the use of test environments, make them more versatile, and reduce the time and resources needed to prepare and maintain them.

Conclusion:

Testing microservices is important to ensure the quality and reliability of web applications. Due to the distributed nature of the microservice architecture, the testing process requires an integrated approach.

We use a multi-level testing pyramid to ensure the stability and high quality of the system. This allows us to control risks at various stages of software product development and implementation.

Advantages:

- Multi-level approach: Testing at different levels ensures that every part of the system is working properly.

- Component isolation: Testing microservices in isolation helps to accurately identify and resolve problems without affecting other parts of the system.

- Speed and efficiency: Test automation, including the use of Docker, facilitates rapid setup of test environments and accelerates the process of development and implementation of changes.

- Testing flexibility: The ability to use stubs for external dependencies and services allows you to test the target service, regardless of the current state of development or the availability of the services it communicates with.

Disadvantages:

- Management complexity: Managing a multi-level testing process can be difficult, especially in large systems with many microservices, and may require additional effort to coordinate and analyze the results.

- Setup complexity: Setting up Docker containers for each microservice and configuring Kafka can be complex and time-consuming, especially for large and complex systems.

- Need for specialized knowledge: Effective use of technologies such as Docker, Jenkins, and PactNet requires a certain level of technical expertise and experience. This means that the testing team will have to be trained to master these tools and integrate them into the testing process, which can increase the time and cost of training staff.